Issue #55

Contents

Tool review: Is your OpenAPI file lying?

Interesting content for the week

Tool updates: Mintlify agent

Feedback & share

Upcoming conferences

My services: API governance consulting

Tool Review: Is Your OpenAPI File Lying? Stop API Drift and Improve AI Agent Readiness with Redocly Respect and Arazzo

We are in the midst of an agentic transformation in the enterprise. This agentic revolution rekindles a focus on providing high-quality OpenAPI description files as we onboard agents as users of our APIs. A big challenge to providing good OpenAPI descriptions is API drift. API drift occurs when your API implementation gradually diverges from its API description. It's insidious because it happens incrementally through everyday development activities.

A developer adds a new query parameter during a hotfix. Another team member changes a response field from optional to required. Someone modifies error codes without updating the docs. Each change seems minor, but collectively they create a dangerous gap between what your OpenAPI description promises and what your API actually delivers.

The consequences ripple outward. API consumers build integrations based on outdated documentation, leading to runtime failures. Your autogenerated SDKs become unreliable. Mock servers and testing environments no longer reflect production behaviour. Teams lose confidence in the description itself, abandoning it as a source of truth. This confusion does not help AI agents that consume the API descriptions.

The root cause is often organisational rather than technical. In fast-paced development environments, keeping descriptions synchronised with implementation requires discipline that competes with feature delivery pressures. Without automated verification, drift becomes inevitable.

Provider-driven contract testing to the rescue

One way to combat OpenAPI drift is provider-driven contract (PDC) testing. This is a testing approach where the API provider (the team that builds the API) defines and maintains the contract that specifies how their API behaves — its endpoints, request/response formats, headers, status codes, etc. In this model, the OpenAPI contract (description file) is the absolute source of truth. The goal of the test is to ensure the provider API fulfils every promise articulated in the published description. This approach validates the API’s complete published contract, regardless of whether a specific consumer uses all fields or paths. This methodology is optimal for public or external APIs where the publisher must maintain absolute consistency for an unknown number of consumers. Tools in this category, such as Redocly Respect, test the live API against the rigid schema defined in the OpenAPI description.

PDC is distinct from consumer-driven contract (CDC) testing, which is used by tools like Pact. In CDC, the contract is defined by the expectations of the consumer, not the provider’s entire schema. CDC tests are not designed to expose general bugs in the provider but to prevent breaking the consumer's integration.

As a reminder, here are some differences between PDC and CDC:

| Aspect | Provider-Driven Contract testing | Consumer-Driven Contract testing |
|---|---|---|
| Contract owned by | API provider | API consumer(s) |
| Primary purpose | Provider ensures stability of published contract | Consumers define their usage expectations |
| Typical tools | OpenAPI, Postman Collections | Pact, Spring Cloud Contract |
| Who validates? | Provider validates against contract | Provider validates against multiple consumer contracts |
| Ideal when | One-to-many API, standardised contract | Many-to-one API, diverse consumer needs |

There are other techniques to address OpenAPI drift, such as:

Using Language-Oriented Approach to API Development (I’ll use the acronym LOAAD for this) tools like TypeSpec

Using a design-first approach, and generating code from OpenAPI

Using a code-first approach, and generating OpenAPI from code

Performing runtime API contract validation in a gateway or proxy

But I won’t go into these here (I discuss them in my book). However, I’ll mention that the decision to be made here is not a “pick one” decision. Depending on your API delivery workflow and constraints, you may decide to pick multiple controls for effective drift prevention.

Note: I call this multi-control approach out because there are advantages to combining controls. For example, even if you are using a LOAAD tool like TypeSpec, combining this control with PDC based on Arazzo encourages the production of Arazzo files as part of your workflow. This matters because I see Arazzo workflows as a valuable artefact of your API delivery process for the purpose of agent-readiness. I talk more about this later.

For this post, let's look at how to run PDC in Redocly Respect. Redocly’s SaaS platform offers a feature that allows scheduled execution of Arazzo workflow files, and provides monitoring (alerts, history, graphs) of the results.

Respect's approach to PDC

Redocly Respect, part of Redocly's API tool suite, is a PDC tool that is bundled with Redocly's open source CLI. As a PDC testing tool, it uses an Arazzo workflow file as a description of test steps. Arazzo is a workflow specification layered on OpenAPI, enabling sequences of API calls that represent business processes rather than isolated endpoints. Respect sends API requests to a live API server, and validates that the responses match the expectations. Let's look at how it works.

Imagine I have an Arazzo file called myworkflow.arazzo.yaml. The file defines a workflow with the ID listAndFetchUser, which has two steps: listUsers and getOneUser. These steps call the operations GetUserList and GetUserActivity respectively, both defined in an OpenAPI file, myapi.openapi.yaml.

Here is a simplified example workflow file:

    arazzo: 1.0.1
    ...
    sourceDescriptions:
      - name: myapi
        type: openapi
        url: myapi.openapi.yaml
    workflows:
      - workflowId: listAndFetchUser
        ...
        steps:
          - stepId: listUsers
            operationId: myapi.GetUserList
            outputs:
              id: $response.body#/0/id
          - stepId: getOneUser
            operationId: myapi.GetUserActivity
            parameters:
              - name: id
                in: path
                value: $steps.listUsers.outputs.id

When I run Redocly Respect, it runs a PDC test and validates the status code, content-type and schema of the response as shown below:

$ redocly respect myworkflow.arazzo.yaml

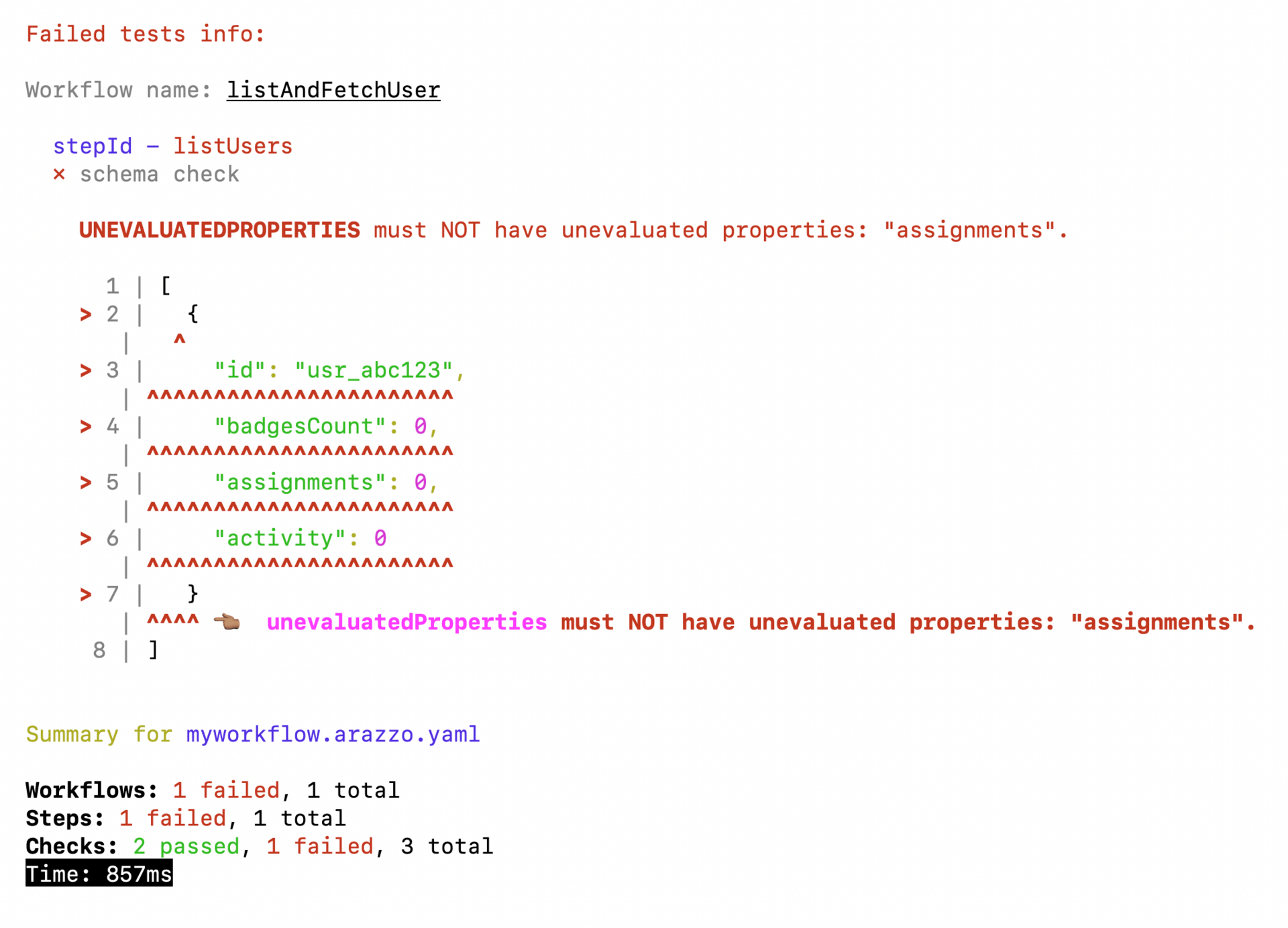
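The workflow above leans on what the OpenAPI description already promises. Arazzo also lets a step spell out its own expectations through successCriteria. A minimal sketch, assuming GetUserList answers with a 200 on success (the status code is an assumption for illustration, not taken from the real API):

```yaml
# Sketch only: the listUsers step from the workflow above, with an explicit check added.
# The expected 200 status is an assumption for illustration.
- stepId: listUsers
  operationId: myapi.GetUserList
  successCriteria:
    - condition: $statusCode == 200
  outputs:
    id: $response.body#/0/id
```

Explicit criteria like this keep the intent of each step readable for both people and agents that consume the workflow file.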

Now, let's introduce drift. Imagine a property called 'assignments' in the OpenAPI file was marked as string rather than integer (which is what the API returns). Will Respect catch this? Yes, running the test flags the mismatch.

And this works even though additionalProperties: false (or unevaluatedProperties: false) is not set in the myapi.openapi.yaml file! This is so handy for catching drift. But what if the assignments field was removed from the OpenAPI file altogether? Would Respect catch that? Yes, it would! Running the test shows Respect complaining about the assignments field.
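To make the two drift scenarios concrete, here is a hypothetical excerpt of what the drifted schema in myapi.openapi.yaml could look like. The schema name UserActivity and the id property are assumptions for illustration; only assignments comes from the example above.

```yaml
# Hypothetical excerpt from myapi.openapi.yaml (schema name and `id` are assumed)
components:
  schemas:
    UserActivity:
      type: object
      # Note: no additionalProperties: false here, so the schema is permissive
      properties:
        id:
          type: string
        # Scenario 1: declared as string, but the live API returns an integer
        assignments:
          type: string
        # Scenario 2: delete the `assignments` property above entirely,
        # while the live API keeps returning it
```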

I suspect that the way Respect works is that it performs schema validation using the Ajv library in strict mode, and so validates actual response payload structure even when schemas are permissive. But I have not verified this.

Before I talk about the advantages and disadvantages I see with using Redocly Respect, let me mention one thing I have left out. Redocly offers a monitoring feature that allows you to run workflows periodically or during builds. It’s a useful control mechanism to ensure that drift does not creep in over time.
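If you would rather start from your own pipeline, the same check can be sketched as a scheduled CI job that calls the CLI. This is not Redocly's monitoring feature, just a generic GitHub Actions workflow; the workflow name, schedule and Node version are assumptions, and it presumes the API server named in the OpenAPI file is reachable from the runner.

```yaml
# Sketch of a GitHub Actions workflow (names, schedule and Node version are assumptions)
name: api-drift-check
on:
  pull_request:          # run during builds
  schedule:
    - cron: "0 6 * * *"  # and once a day at 06:00 UTC
jobs:
  respect:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 22
      # Runs the Arazzo workflow against the live API
      - run: npx @redocly/cli@latest respect myworkflow.arazzo.yaml
```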

Advantages of PDC with Respect

PDC, at its core, validates an API contract. But there are three advantages I can see with Respect's approach.

Vendor-neutral, shareable workflow documentation: It encourages the documentation of business processes using a vendor-neutral standard - Arazzo. A business process (or a series of processes) encapsulates a business capability, which is a strategic asset for a business. The workflow description is valuable living documentation that can provide deterministic consumption recipes, support SDK generation and enable agentic API consumption. This workflow can be shared with and worked on by different teams and stakeholders, so using it as a basis for end-to-end contract testing and validating its correctness makes sense. But even more importantly, the Arazzo workflow file can be provided to AI agents, to give them a great API consumer agent experience. At Platform Summit 2025, Frank Kilcommins defined this as:

“API consumer agent experience is the ease and reliability with which agents can discover, reason about, and safely execute API operations - enabled by rich machine-readable documentation, clear boundaries, and predictable runtime behaviours” - Frank Kilcommins

As Frank also mentioned in the talk, it is important to codify intent-based use cases and workflows. Using Arazzo and Redocly Respect definitely helps with this.

Note: By the way, Frank’s excellent talk at Platform Summit 2025, “Beyond the Agent Hype: Why Arazzo and Workflows Matter for Predictable AI”, is worth looking out for when it is published on the Nordic APIs channel. Highly recommended.

Catch drift and incomplete schema descriptions even without strict schema validation: When additionalProperties: false or unevaluatedProperties: false is not set (which is the default case), OpenAPI does permit the server to send extra properties not defined in the OpenAPI document. This is not technically drift. But it is helpful in many cases (especially for security) to have the OpenAPI define all expected properties (so we can spot anomalies). The reality is that many OpenAPI files do not have additionalProperties: false set on schemas, so teams may need to do some retroactive work to add this (for example with OpenAPI overlays or other tools). But Respect can catch missing fields even without these strict schema validation directives, which is a big win.

Workflow focused: In previous projects where I have had to deal with OpenAPI drift across many services, I found it impractical to try and fix the drift in all OpenAPI files at once. Instead, I focused on the key business processes that were most important to the business, and created workflows for these. This enabled me to deliver high value quickly. Respect fits this use case well.
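For the strict-schema point in the second advantage above, here is a sketch of one hypothetical schema in its permissive (default) and strict forms. The schema and property names are assumptions for illustration.

```yaml
# Hypothetical schemas; names are assumed for illustration
components:
  schemas:
    UserPermissive:
      type: object
      # additionalProperties is not set, so extra response properties are allowed
      properties:
        id:
          type: string
    UserStrict:
      type: object
      additionalProperties: false  # any property not listed below is a violation
      properties:
        id:
          type: string
```

Retrofitting the strict form across many existing schemas is the retroactive work mentioned above.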

Downsides of PDC with Respect

The drawbacks of PDC testing in general are described elsewhere and also in my book, but I will mention that:

It has all the downsides of E2E testing: E2E testing is inherently brittle and slow if not managed properly. PDC tests are no different, so be tactical about where you apply them.

Arazzo required: Respect requires an Arazzo workflow file to define the test steps. This is an additional artefact that teams need to create and maintain. While I recommend Arazzo, I also understand that not all teams have adopted it yet. However, as I mentioned, I think Arazzo is worth the overhead for complex, agent-friendly APIs.

Workflow focused: Yes, being workflow focused is an advantage for Respect, but it can also be a disadvantage in some scenarios. If you want to test all the individual operations in one OpenAPI file, you'll have to create an Arazzo file that references each endpoint. Yes, this is easy to do with genAI, but it is still an overhead. Rather than creating a workflow that calls all operations, you may want to consider a property-based testing tool that works from a single OpenAPI file, like Schemathesis.

Alternative tools

PDC is one control teams can adopt in their API delivery workflow to combat OpenAPI drift, but these days probably the most effective control type would be to use language-oriented API development tools like TypeSpec (which I have mentioned previously) for your API development. However, if PDC is a control technique you use in your API delivery workflow, here are some alternative tools to Respect:

Postman Collections: You can create your workflows as Postman collections and also schedule regular execution of the collection in your CI/CD pipeline or using scheduled collection runs to detect drift. If you are going down this path, consider the licence costs for your team. Also, Postman encourages you to store and collaborate on collections in their cloud, and some teams may not be comfortable with this. But most importantly, Postman collections are not vendor-neutral like Arazzo.

Specmatic: One API testing tool rising in popularity is Specmatic. One of its features, Specmatic Studio, offers a way to visually create workflows defined in Arazzo, execute them, and get drift reports. Of course, it supports contract testing on the command line as well. One to watch.

Schemathesis: Schemathesis can run property-based tests on APIs and perform OpenAPI contract validation. Using its stateful testing feature and OpenAPI links is one way to simulate workflows to a limited extent. But setting up OpenAPI links can be tedious, and links are not very popular. Also, OpenAPI links work within a single file, not across files like Arazzo.

Others: Numerous tools exist for API contract testing, such as Dredd, RestAssured (with example here), and Microcks. These tools, at time of writing, do not have native support for PDC testing with Arazzo.
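For the Schemathesis option above, this is roughly what an OpenAPI link between the two operations from the earlier workflow example could look like. The /users path and the link name are assumptions; the operation IDs come from the workflow example.

```yaml
# Sketch of an OpenAPI link (path and link name are assumptions)
paths:
  /users:
    get:
      operationId: GetUserList
      responses:
        "200":
          description: A list of users
          links:
            GetActivityForFirstUser:
              operationId: GetUserActivity
              parameters:
                # Feed the first user's id into the linked operation
                id: $response.body#/0/id
```

The runtime expression is the same one the Arazzo step uses, but here it has to be repeated on every response that should lead into another operation.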

I would like to do an analysis of how these tools compare to Respect for the PDC use case along the following axes: workflow support, vendor-neutral workflow definition, schema validation strictness, and drift detection reporting. Perhaps one for later, or possibly part of my upcoming talk on API drift at API Conference Berlin.

Conclusion

I like Redocly Respect. In short, I like the Redocly CLI, full stop, and I recommend it to my clients. For the use case of PDC testing to validate an OpenAPI file, I give Redocly Respect 5/5. It is easy to use, and it can catch OpenAPI drift effectively when used as a PDC pattern implementation. And I look forward to more API tool vendors following Redocly’s lead with Arazzo-based API testing tools.

Redocly Respect gets a 5/5 rating.

If you would like to give Respect a try, Redocly does offer a getting-started guide to Respect, which you can find here.

Interesting Content for the Week

Some of these news links are a bit old (but still useful). We prepared them two weeks ago, but I was busy getting ready for Platform Summit 2025, and didn’t send them out. Sorry for the delay!

Runtime AI Governance

The Agentic Sandbox: Infrastructure for Safe AI: “Jentic’s agentic sandbox lets enterprises test and validate AI agents safely by simulating real APIs and data systems. It turns experimentation into audited, reusable workflows so AI can move from testing to production with structure, trust, and control.“ - Frank Kilcommins

How to make your APIs ready for AI agents: Dhayalan Subramanian outlines a comprehensive strategy for making APIs "agent-friendly" by evolving them from interfaces designed for human developers to structured tools consumable by autonomous AI agents.

AI is About to Make Your API Sprawl 10x Worse: “…instead of AI making existing tools better, we're getting entirely new categories of AI-specific API tools…” - Shane O'Connor shares a solution to avoiding API sprawl as organisations adopt AI.

Releasing an MCP server: lessons learned: “…agents perform better with fewer tools, don’t map API endpoints one-to-one, release gradually, and send the AI natural language.” - Bill Doerrfeld

Trusted AI at Scale: Secure Governance and Scalable Management for Your AI Models (Video): JFrog explains the fundamental concept of tool calling for LLMs, which is essential for understanding AI agents.

Building the future of agents with Claude (Video): Anthropic’s Alex Albert, Brad Abrams and Katelyn Lesse discuss the evolution of building agents with Claude, the latest Claude Developer Platform features, and why agents perform best when developers “unhobble” their model with tools.

API Production Governance

Exploring the Future of API Security and Identity at Platform Summit 2025: Curity shares the key themes for the Future of API Security and Identity to be discussed at the Platform Summit 2025.

Building an MCP Gateway with Apigee API Gateway: Christian Posta shows how to use Apigee API gateway to implement governance, security, and authorisation around MCP servers.

Building Reliable API Workflows with Arazzo: Rod Rivera shows how Arazzo elevates API documentation from a description of individual operations to a comprehensive, executable definition of underlying business logic, which is critical for scaling reliable agentic systems.

How to gracefully handle resource variants in your REST API: Bruce Hill analyses four distinct ways of managing resource variants with the goal of finding the best balance between type clarity, SDK usability (ergonomics), and long-term maintainability.

Universal Tool Calling Protocol (UTCP): a scalable standard designed to facilitate the direct discovery and calling of tools by AI agents and applications, utilising the tools' native protocols without the need for intermediary wrapper servers.

Tool Updates

Mintlify agent helps you keep your API docs up to date. You tell the agent what needs updating, and provide any PRs, Slack threads or links it needs for context. Based on your API docs structure and writing style, it drafts the changes and raises a PR with the changes for you. You can then review and merge it. It can even generate a change log for you. See an example on YouTube.

What do you think of this newsletter issue?

I appreciate your feedback. If you find my newsletter useful, please forward and share it with a friend.

Upcoming API Conferences

API Conference Berlin: Theme - The Conference for Web APIs, API Design & Management, Date - October 20 - 22, 2025. Register to get your tickets.

My Services: API Governance Consulting

Is poor API governance slowing down your delivery? Do you experience API sprawl, API drift and poor API developer satisfaction? I'll provide expert guidance and a tailored roadmap to transform your API practices.

Ikenna® Delivery Assessment → Identify your biggest API delivery pain points.

Ikenna® Delivery Canvas (IDC) & API Transformation Plan → Get a unified, data-driven view of your API delivery and governance process.

Ikenna® Improvement Cycles → Instil a culture of scientific, measurable progress towards API governance.

Ikenna® Governance Team Model → Set up and improve your governance team to sustain progress.

Ikenna® Delivery Automation Guidance → Reduce lead time and improve API quality through automation.

Schedule a consultation by emailing: [email protected].